Unable to Login - "Your username or password may be incorrect or inactive at this time"


horizonxael

I changed my password because I was convinced that someone had hacked me, and now I don't have access.

0

0 -

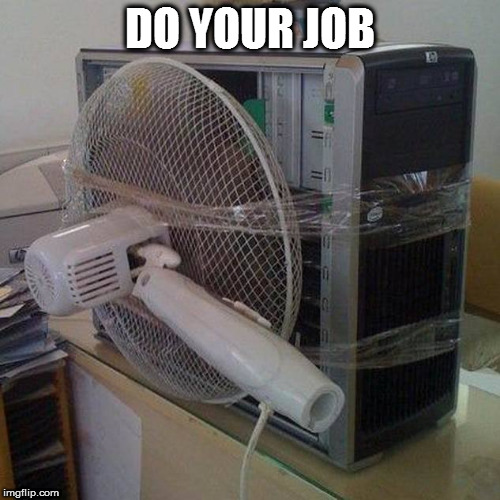

Allington

Did they try:

1) Turning it off and on again

2) Hitting it

One of those usually works, imo.

Cat in a hat.

Kos

I just started playing a few days ago on the EU server after a long break, and since then there have been constant issues. So far I have experienced super long loading screens, after which I was usually returned to the starting point, a quest that bugged out (the enchanted boat in Stonefall), super crazy lag, and now I can't log in to the game or my account, and I can't even open a support ticket. I must have chosen the worst time to rejoin, I suppose.

The graphics and soundtrack are great, and it is fun to play, but the technical issues are really spoiling it right now. I have a couple of hours a day at most after a full day of work, and when I finally sit down to play, I can't log in.


okiav

Ragged_Claw wrote: »When ZoS go to take my ESO+ money:

'I am currently investigating issues some companies are having regarding taking subscription fees from my bank account. I will update as new information becomes available.'

You should make a separate thread with that title; it would be awesome to see the mods close it.


mairwen85

Ragged_Claw wrote: »When ZoS go to take my ESO+ money:

'I am currently investigating issues some companies are having regarding taking subscription fees from my bank account. I will update as new information becomes available.'

You should make a separate thread with that title; it would be awesome to see the mods close it.

Careful... you'll trigger a series of meme threads.

Julia_Nix

Grimreaper2000 wrote: »Unbelievable and unacceptable! Server issues are always possible, but not 6 or more times in a few days.

Most people pay pay for it, are subscribed ... and all we we get are "we are investigating, thx for your patience".

Never heard of a compensation for paying members?!

"pay pay" "we we"

You ok bro? XD

Trikie_Dik

As for the comment about BCP (business continuity planning) versus DR (disaster recovery)....

You do NOT roll to your Disaster Recovery instance for a tiny blip like login issues; many times it would experience the same problem if there are deeper-seated integration issues. Not only do you need to ensure the DR environment is set up with the latest copy of production, but you also need a plan to re-sync with production once the DR environment is no longer needed.

In my line of work, the ATM and banking support industry, you only roll to DR in the event of a total disaster, when the current environment will require days of work and won't be usable for quite some time. The situation must justify not only the time to cut over, but also the time required to re-sync and cut back over to production once it is ready.

Long story short: we are wayyyy too early for a 'roll to DR' plan at this point.

Even for a BCP plan... if you're having integration issues between the app and the credential store, swapping over to a backup DB or app server will likely have the same issue.

Keep in mind that all of the above comes from my exposure to the companies I have worked for and the general industry standard there; however, that is NOT a game whose primary intent is to provide entertainment. No one will die if the game stays down for 2 hours, no one is locked in a vault or running out of air, etc. Give them some time, and let's hope they get a speedy recovery!
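Trikie_Dik's rule of thumb can be written down as a small decision function. This is a minimal sketch, not any company's actual runbook: the names (`Incident`, `DrCosts`, `should_roll_to_dr`) and the hour figures are hypothetical. It encodes the two points made in the post: a fault in the integration between the app and the credential store would follow you to the DR copy, and a cutover only pays off when the expected outage outlasts the full round trip of cutting over, re-syncing, and cutting back.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    expected_outage_hours: float
    # Hypothetical flag: e.g. the app <-> credential store integration is failing
    shared_integration_fault: bool


@dataclass
class DrCosts:
    cutover_hours: float  # switch traffic to the DR environment
    resync_hours: float   # bring production back in sync with DR
    cutback_hours: float  # switch traffic back to production

    def round_trip_hours(self) -> float:
        return self.cutover_hours + self.resync_hours + self.cutback_hours


def should_roll_to_dr(incident: Incident, costs: DrCosts) -> bool:
    """Hypothetical rule of thumb for when a DR failover is worth it."""
    if incident.shared_integration_fault:
        # The DR copy runs the same integration, so it would fail the same way.
        return False
    # Only worth it if the outage outlasts the whole DR round trip.
    return incident.expected_outage_hours > costs.round_trip_hours()


if __name__ == "__main__":
    costs = DrCosts(cutover_hours=4, resync_hours=8, cutback_hours=4)
    login_blip = Incident(expected_outage_hours=2, shared_integration_fault=True)
    total_disaster = Incident(expected_outage_hours=72, shared_integration_fault=False)
    print(should_roll_to_dr(login_blip, costs))      # False
    print(should_roll_to_dr(total_disaster, costs))  # True
```

The integration check comes first on purpose: no amount of expected downtime makes a failover useful if the standby would hit the same fault.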

Kadoin

I pay good money to play this game. I can only play so many hours a day, as I'm in rehab and have quite an extensive schedule while I'm in here. Playing this for a couple of hours in the evening relieves the stress, and this *** isn't f*cking helping. At least compensate with something...

Hi, friend


Varana

Trikie_Dik wrote: »A typical SDLC (software development life cycle) will involve replicating the issue in a dev/sandbox environment, packaging a deployment script that contains the proposed fix AFTER creating the necessary backup files in case of a rollback, then testing that fix in the dev/sandbox instance. Once that's proven to be a fix, you need to test the rollback procedure in case it won't perform the same in production, and then finally get all the approvals, sign-offs, user verification testing, etc. before conducting a production rollout.

Hmm. Then maybe they should be the ones telling us that stuff, and where they are in that process, rather than some guy on the internet (without doubting your expertise). Instead we get some vague "we're looking into some issue that some players might be having, kthxbye".

And yes, that represents their communication in a slightly unfair way, but they totally deserve it.

wenchmore420b14_ESO

Yup! PTS also not working.... Wow... Everybody down!!

Drakon Koryn~Oryndill, Rogue~Mage,- CP ~Doesn't matter any more

NA / PC Beta Member since Nov 2013

GM~Conclave-of-Shadows, EP Social Guild, ~Proud member of: The Wandering Merchants, Phoenix Rising, Imperial Trade Union & Celestials of Nirn

Sister Guilds with: Coroner's Report, Children of Skyrim, Sunshine Daydream, Tamriel Fisheries, Knights Arcanum and more

"Not All Who Wander are Lost"

#MOREHOUSINGSLOTS

“When the people that can make the company more successful are sales and marketing people, they end up running the companies. The product people get driven out of the decision making forums, and the companies forget what it means to make great products.”

-- Steve Jobs (The Lost Interview)

Gex3me

Every day there's a new problem with this game. Everything is broken; just fire everyone and try again with a new team. This is just unacceptable!

mairwen85

Trikie_Dik wrote: »As for the comment about BCP versus DR.... (..)

OK.

vestahls

Guess the performance improvements are going well. The game can't lag if it isn't working.

“He is even worse than a n'wah. He is - may Vivec forgive me for uttering this word - a Hlaalu.”

luv Abnur

luv Rigurt

luv Stibbons

'ate Ayrenn

'ate Razum-dar

'ate Khamira

simple as

turlisley

Can we go one day without ESO server stability/performance/queue/patch/loading/login problems? ONE DAY?!?! FFS.

@ZOS_GinaBruno you are the messenger and intermediary between ESO players and ZOS corporate suits.

Go tell your superiors to hire more capable Software Architects, Server Database Administrators and Information Technology professionals who can properly and effectively manage ESO's 'complex' system.

ZOS is a for-profit company, right? Then please stop holding the players hostage with persistent downtime that countless emergency maintenances, hotfixes, and patches have failed to fix in the past two weeks alone.

Also, please extend the Witchmother's Festival event, refund ESO+ subscriptions for this month, and make the Dragonhold/Southern Elsweyr DLC free, because Northern Elsweyr was a cliffhanger and only one half of a chapter.

Jeffrey Epstein didn't kill himself.

ESO Platform/Region: PC/NA. ESO ID: @Turlisley

Pyvos

It seems there is a data center outage in the Netherlands at the moment, judging by other games being affected (from what I'm seeing, GW2). If I had to guess, the login server issues probably come from failing to read from or write to nodes in those data centers, depending on the replication factor (how many nodes hold a copy of each row) and the consistency level (how many of those replicas must respond to a read or write), so it's probably returning failed reads or writes.

Just speculation based on experience, of course, but this can happen with Apache Cassandra (and ScyllaDB) depending on the replication factor and consistency level.

Edited by Pyvos on October 29, 2019 9:06PM
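Pyvos's guess hinges on how replication factor (RF) and consistency level interact in a Cassandra-style database. The following is a minimal Python sketch of that arithmetic, assuming a single data center and only the ONE/TWO/THREE/QUORUM/ALL levels; it ignores timeouts, hinted handoff, and the LOCAL_*/EACH_* levels, and the function names are hypothetical rather than part of any Cassandra driver. A request is rejected up front when fewer replicas are alive than the level requires, and reads are only guaranteed to see the latest acknowledged write when read acks plus write acks exceed RF.

```python
from enum import Enum


class Consistency(Enum):
    """Subset of Cassandra consistency levels (single data center assumed)."""
    ONE = "ONE"
    TWO = "TWO"
    THREE = "THREE"
    QUORUM = "QUORUM"
    ALL = "ALL"


def quorum(replication_factor: int) -> int:
    """A quorum is a strict majority of replicas: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def required_acks(level: Consistency, replication_factor: int) -> int:
    """How many replicas must answer before the coordinator reports success."""
    fixed = {Consistency.ONE: 1, Consistency.TWO: 2, Consistency.THREE: 3}
    if level in fixed:
        return fixed[level]
    if level is Consistency.QUORUM:
        return quorum(replication_factor)
    return replication_factor  # Consistency.ALL


def request_succeeds(level: Consistency, replication_factor: int,
                     live_replicas: int) -> bool:
    """A read or write is rejected as unavailable if too few replicas are up."""
    return live_replicas >= required_acks(level, replication_factor)


def reads_see_latest_write(read_level: Consistency, write_level: Consistency,
                           replication_factor: int) -> bool:
    """Read and write replica sets are guaranteed to overlap only if R + W > RF."""
    r = required_acks(read_level, replication_factor)
    w = required_acks(write_level, replication_factor)
    return r + w > replication_factor


if __name__ == "__main__":
    rf = 3
    # Hypothetical outage: only 1 of 3 replicas is reachable.
    for level in (Consistency.ONE, Consistency.QUORUM, Consistency.ALL):
        print(level.value, request_succeeds(level, rf, live_replicas=1))
```

With RF = 3 and only one reachable replica, this prints `ONE True`, `QUORUM False`, and `ALL False`: the kind of partial failure Pyvos is speculating about, where some requests still work while others fail outright.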

imno007b14_ESO

RodneyRegis wrote: »Hey guys - no need to keep telling us you have the same problem as everybody else has been reporting for 12 pages...

Sure there is: our constant griping will keep aggravating someone, who will keep aggravating others to get it fixed ASAP.


Idinuse

Again? This is just....

Sed ut perspiciatis unde omnis iste natus error sit voluptatem accusantium dolorem que laudantium, totam rem aperiam, eaque ipsa quae ab illo inventore veritatis et quasi architecto beatae vitae dicta sunt explicabo. Nemo enim ipsam voluptatem quia voluptas sit aspernatur aut odit aut fugit, sed quia consequuntur magni dolores eos qui ratione voluptatem sequi nesciunt. Neque porro quisquam est, qui dolorem ipsum quia dolor sit amet, consectetur, adipisci velit, sed quia non numquam eius modi tempora incidunt ut labore et dolore magnam aliquam quaerat voluptatem. Ut enim ad minima veniam, quis nostrum exercitationem ullam corporis suscipit laboriosam, nisi ut aliquid ex ea commodi consequatur? Quis autem vel eum iure reprehenderit qui in ea voluptate velit esse quam nihil molestiae consequatur, vel illum qui dolorem eum fugiat quo voluptas nulla pariatur?

Turgenev

Kos wrote: »I just started playing a few days ago on the EU server after a long break, and since then there have been constant issues. So far I have experienced super long loading screens, after which I was usually returned to the starting point, a quest that bugged out (the enchanted boat in Stonefall), super crazy lag, and now I can't log in to the game or my account, and I can't even open a support ticket. I must have chosen the worst time to rejoin, I suppose.

The graphics and soundtrack are great, and it is fun to play, but the technical issues are really spoiling it right now. I have a couple of hours a day at most after a full day of work, and when I finally sit down to play, I can't log in.

I would not call myself an apologist, but I will say, as a regular player for the last 5 years, that this sort of technical issue is definitely not the norm. There may be sporadic issues once in a while, but the last week has been exceptionally bad, which ZOS is aware of and is attempting to address.

Don't let it discourage you; this is far from a regular occurrence. We have plenty of other bugs to keep us busy :-)

Turgenev || PS | COH | STO | TSW | ESO

Proud Member of IRON Phoenix

"Remember. You chose this death." - Lucifer

pluckpluck

Trikie_Dik wrote: »(..)

At the same time, you have to look at it from the dev and ops point of view, and know they did not create this issue just to inconvenience us. Speaking from personal experience, when a large enterprise issue pops up, it's not always easy to fully diagnose it and get down to the root cause. Furthermore, once you find the issue, the systems are so complex that you can't just go throwing untested code fixes into production.

A typical SDLC will involve replicating the issue in a dev/sandbox environment, packaging a deployment script that contains the proposed fix AFTER creating the necessary backup files in case of a rollback, then testing that fix in the dev/sandbox instance. Once that's proven to be a fix, you need to test the rollback procedure in case it won't perform the same in production, and then finally get all the approvals, sign-offs, user verification testing, etc. before conducting a production rollout.

(..)

And until you've corrected the issue, do you publish new content? Do you push new patches that don't correct previous issues but introduce new ones? As a coder, my point of view is: you have an issue, you fix it before anything else. You don't postpone the issue for 6+ months, by which point you no longer understand what is going on in your code. Period.

Your defense is good, but ZoS does everything wrong when it comes to addressing issues.

Every event brings a patch with new content that crashes the game/servers, without solving previous bugs and even introducing new ones in zones these patches should leave untouched.

"The net is a waste of time, and that's exactly what's right about it."

-- W. Gibson

SipofMaim

If ZOS has an in-house saboteur, he's very good.

I keep revising my expectations downward, but this is shaping up to be a good patch to do something else with my gaming time. What a circus.

Palidon

ZOS, you need to fix your broken game. There are no more excuses. Not being able to log on, long load screens, disconnects, etc. have been going on way too long. The never-ending story: every new DLC or expansion brings more crap players have to deal with.

DrOuttaSight

RefLiberty wrote: »

I always use Bold, so that my posts stand out from the multitude of other posts in the thread

I guess I could use bold and italic next time.

Forums are for........... venting/letting off steam

Feed the needy Not the greedy

In Virtual Space No One Can Hear You Scream!!!

PC EU Clockwork Server

This discussion has been closed.